Nobody really knows how the FBI is hacking into iPhones.

Well, nobody except Cellebrite and the FBI themselves.

We can safely assume that the underlying crypto wasn’t hacked–that would be truly catastrophic for everyone’s security, and way above the pay grade of a company like Cellebrite.

So we have to conclude that the FBI has somehow managed to trick the iPhone into giving up its encryption keys, or has bypassed the passcode protections on the phone. Apparently the hack doesn’t work on the iPhone 5S and later devices, and obviously this can’t be a software difference (because all iOS devices run essentially the same software), so it has to be a hardware limitation, one that probably affects the key storage.

The only relevant difference between the iPhone 5C, which can be broken, and the iPhone 5S, which can’t, is the Secure Enclave. That leads me to believe this is some sort of key extraction that can be performed when keys are stored outside the Secure Enclave, although I’m no expert here.

When a company like WhatsApp decides to implement end-to-end encryption, they release a detailed whitepaper describing exactly how the keys are generated, managed and stored. On the other hand, when a company like zipitchat (a subsidiary of celcom) decides to use encryption, they boldly proclaim they’re “absolutely un-hackable”, but provide no proof for such ludicrous claims, nor detail how their keys are generated and stored. Claims like these should never be trusted.

Encryption is hard, not because encrypting is hard–but because it’s hard to securely store the keys. It’s made even harder when you have to store the key and data on the same device, but fortunately, there are some examples of securely doing so.

Using asymmetric keys

The key storage problem can be circumvented if you use asymmetric encryption, where the encryption key is different from the decryption key. Well-written ransomware, like CryptoLocker, receives a pre-generated encryption key from a central server and uses it to encrypt files, leaving the corresponding decryption key ‘securely’ on the server. This way, even though there is a key on the victim’s computer, that key is completely useless when it comes to decrypting the ransomed files.
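To make the split between the two keys concrete, here is a toy sketch using textbook RSA with tiny primes. The numbers and the function names (`encrypt`, `decrypt`) are purely illustrative assumptions; real systems use vetted libraries, large keys and padding, and this is not how any particular ransomware is implemented.

```python
# Toy RSA with tiny textbook primes: for illustration only, never for real data.
p, q = 61, 53
n = p * q                  # public modulus (3233)
phi = (p - 1) * (q - 1)    # 3120, kept secret
e = 17                     # public (encryption) exponent
d = pow(e, -1, phi)        # private (decryption) exponent; needs Python 3.8+


def encrypt(m: int) -> int:
    """Anyone holding the public pair (e, n) can encrypt."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """Only the holder of d (the server, in the ransomware case) can decrypt."""
    return pow(c, d, n)


message = 65
ciphertext = encrypt(message)
assert ciphertext != message
assert decrypt(ciphertext) == message
```

The machine doing the encrypting only ever needs `e` and `n`; as long as `d` stays elsewhere, finding the key on that machine doesn’t help with decryption.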

But asymmetric encryption is painfully slow, and implementing it on something like a Windows PC or an iPhone would incur too big a performance penalty to be ‘consumer’ friendly.

Using user-generated passwords

When you use BitLocker or TrueCrypt, the encryption key is derived from a secure password you provide when you log on or mount the drive. The moment the computer is turned off, the memory is flushed and the key is forgotten. So without knowledge of the secure password, the encrypted data remains safe, since it doesn’t live alongside the key that encrypts it.

Usually, what programs like these do is turn a user-generated password into a key by running it through a ‘key strengthening’ algorithm such as PBKDF2. These take generally low-entropy user passwords and pass them through deliberately time-consuming functions to produce a fixed-length output suitable for use as an encryption key. The output has no more entropy than the password that went in, but every guess becomes far more expensive for an attacker.
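As a minimal sketch of that idea, Python’s standard library exposes PBKDF2 directly. The helper name `derive_key`, the iteration count and the salt size below are assumptions chosen for illustration, not the parameters any particular disk-encryption product uses.

```python
import hashlib
import os


def derive_key(password: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Stretch a password into a 32-byte key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=32
    )


# The salt is not secret; it is stored next to the encrypted data so the
# same key can be re-derived the next time the password is entered.
salt = os.urandom(16)
key = derive_key("correct horse battery staple", salt)
print(len(key), "byte key derived")
```

Only the salt is stored. The key exists in memory while the volume is mounted and has to be re-derived from the password every time.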

iPhones do something very similar with your passcode.

The iPhone’s approach

But with the iPhone, the key is derived from something a lot less secure than a random password: a simple 4 or 6 digit code (for most people at least). On its own, this is trivial to brute-force on most computers, and hence makes the device inherently insecure.
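A quick back-of-the-envelope calculation shows how small that search space is. The guess rate below is a made-up assumption for an attacker who can test passcodes off the device; the helper name `worst_case_seconds` is likewise just for illustration.

```python
def worst_case_seconds(digits: int, guesses_per_second: float) -> float:
    """Time to try every numeric passcode of the given length."""
    return 10 ** digits / guesses_per_second


ASSUMED_RATE = 1_000_000  # hypothetical guesses per second, off-device

for digits in (4, 6):
    total = 10 ** digits
    seconds = worst_case_seconds(digits, ASSUMED_RATE)
    print(f"{digits} digits: {total} possible codes, {seconds} s to try them all")
```

Even if the real rate were a thousand times slower, the whole space would still fall in minutes, which is why the passcode alone can’t be what protects the data.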

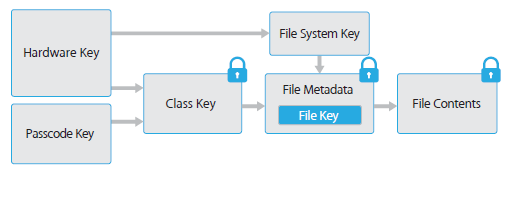

What Apple does to secure this is to ‘complement’ the passcode (which has very little entropy) with a unique device key (which has very high entropy). These two keys are then used to encrypt a further set of keys, which finally encrypt the data on the disk. The important point, though, is that the security of the phone relies on both the user-entered passcode and the unique device key.
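The idea of tying a weak passcode to a strong device secret can be sketched roughly as follows. This is not Apple’s actual construction: `DEVICE_KEY`, `derive_passcode_key`, the use of HMAC and the iteration count are all assumptions made for illustration.

```python
import hashlib
import hmac
import os

# Hypothetical stand-in for the unique per-device key. On a real phone this
# would live in hardware; here it is just random bytes.
DEVICE_KEY = os.urandom(32)


def derive_passcode_key(passcode: str, device_key: bytes, salt: bytes) -> bytes:
    """Mix a low-entropy passcode with a high-entropy device key."""
    # Step 1: stretch the passcode so that each guess is slow.
    stretched = hashlib.pbkdf2_hmac("sha256", passcode.encode(), salt, 100_000)
    # Step 2: bind the result to this device. Without device_key the
    # output cannot be computed, however many passcodes are tried.
    return hmac.new(device_key, stretched, hashlib.sha256).digest()


salt = os.urandom(16)
on_device = derive_passcode_key("1234", DEVICE_KEY, salt)
elsewhere = derive_passcode_key("1234", os.urandom(32), salt)
assert on_device != elsewhere  # right passcode, wrong device: wrong key
```

In a scheme like this the derived key would go on to encrypt the further set of keys, so guessing the passcode is only useful on the device that holds the device key.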

The problem, of course, is that the unique device key, which provides most of the entropy, is on the device itself, and with some computer forensics techniques or a clever hack it might be revealed, breaking the entire system.

This is very similar to the TrueCrypt and BitLocker approach to disk encryption, with the fundamental difference being that a laptop or desktop has a keyboard, and a keyboard lends itself better to long, complex passwords. Laptops are also more likely to be used for long stretches at a time without being turned off; smartphones, on the other hand, are far more likely to be turned off and on, requiring a password entry each time.

Hence a long alphanumeric password is acceptable for laptop use, but not iPhone use.

The problem with physical access

For a long time in computer security, we’ve lived under the assumption that once an attacker has gained physical access to the computer in question, all bets are off. Apple is working hard to ensure that this assumption no longer holds true in the next iteration of iOS, and is putting it to a serious test.

But there is already a computer that broke that assumption nearly a decade ago–you quite possibly have it in your pocket right now. It’s your credit card.

Last year, I wrote a piece about how Chip and PIN will be coming to Malaysia at the end of 2016, and credit cards are great examples of crypto-engines that hold secure keys and never give them up.

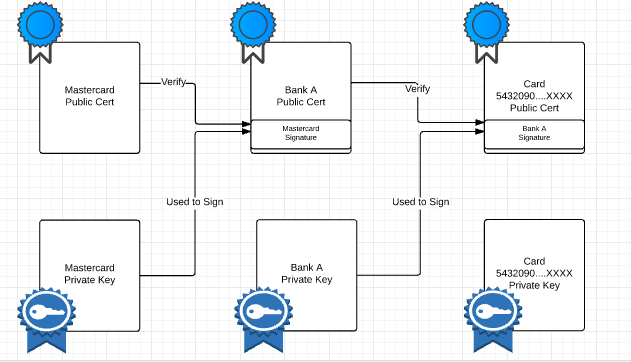

When you insert (or as the British say, ‘dip’) your card into a card terminal, the card authenticates itself to the terminal by providing two chained certificates, one that belongs to the issuing bank and another that is card-specific. The terminal uses a root certificate provided by the card scheme (Visa, Mastercard, JCB, etc.) to verify these card-provided certificates. Finally, the terminal challenges the card to prove that it has the private key corresponding to the card-specific certificate.

It’s all very complex, but it’s worked so far, because no one (at least officially) has figured out how to obtain the secret key from the card–but many have figured out easier ways to circumvent the protection.

Credit cards also have fail-safes (just like iPhones), where the chip will completely block all PIN attempts after a certain number of retries. Brute-forcing a card to determine the PIN isn’t a feasible option.

The point, though, is that the hardware chip on the card never reveals its encryption keys to anyone. It can only encrypt an input as directed, or validate an already encrypted one (nothing more and nothing less).
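In software terms, the chip behaves like an object whose key has no getter. The sketch below is a loose model of that behaviour: the `ChipCard` class, the three-try limit and the use of HMAC (standing in for the card’s real certificate-based cryptography) are assumptions for illustration, and Python’s private attributes are only a convention, whereas a real chip enforces this in hardware.

```python
import hashlib
import hmac
import os


class ChipCard:
    """Toy model of a chip that uses its key but never hands it out."""

    MAX_PIN_TRIES = 3  # assumed limit; real cards set their own

    def __init__(self, pin: str):
        self.__key = os.urandom(32)  # generated inside, no method returns it
        self.__pin = pin
        self._tries_left = self.MAX_PIN_TRIES

    @property
    def blocked(self) -> bool:
        return self._tries_left == 0

    def verify_pin(self, attempt: str) -> bool:
        """Check a PIN guess, blocking the card after too many failures."""
        if self.blocked:
            raise PermissionError("PIN blocked")
        if hmac.compare_digest(attempt.encode(), self.__pin.encode()):
            self._tries_left = self.MAX_PIN_TRIES
            return True
        self._tries_left -= 1
        return False

    def respond(self, challenge: bytes) -> bytes:
        """Prove possession of the key by keying a MAC over the challenge."""
        return hmac.new(self.__key, challenge, hashlib.sha256).digest()


card = ChipCard("4321")
for guess in ("0000", "1111", "2222"):
    card.verify_pin(guess)
print("blocked after three wrong guesses:", card.blocked)
```

The only operations on offer are `verify_pin` and `respond`, so an attacker holding the card is limited to three PIN guesses and can see responses to challenges, never the key that produced them.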

Think of encryption as a massive forty-foot-tall fence that is only one foot wide. It’s much easier to work around than it is to break through, and when you have the keys to the kingdom on a device you physically hold in your hand, things get easier, although if Apple has its way, easier will still be pretty darn hard.

As challenging as it is to secure a device with the keys inside–it’s even more challenging to secure devices with implicit (or explicit) back-doors. Hopefully we solve this issue before we are forced politically to solve the other.