The Serverless Framework (SF) is a fantastic tool for testing and deploying Lambda functions, but its reliance on CloudFormation makes it clumsy for infrastructure like DynamoDB tables, S3 buckets or SQS queues.

For example, if your serverless.yml file had 5 lambdas, you could run sls deploy all day long. But add just one S3 bucket, and you'd first have to sls remove before you could deploy again. This change in the framework's behavior once you introduce 'infra' is clumsy: sometimes I just want to deploy new functions without removing existing resources.

Terraform, on the other hand, keeps the state of your infrastructure and applies only the changes. It also has powerful commands like taint, which marks a single piece of infrastructure to be destroyed and re-created on the next apply, for instance to wipe a DynamoDB table clean.

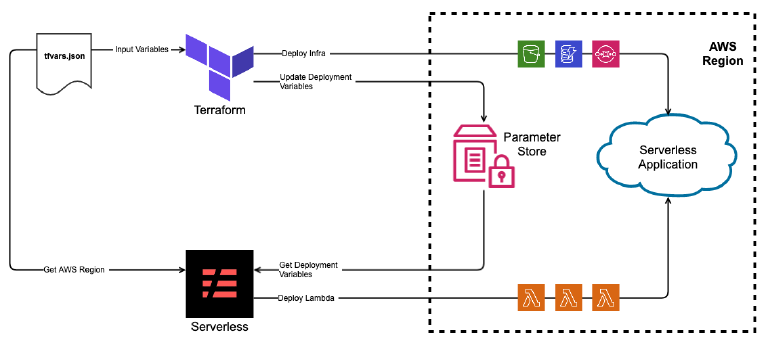

In this post, I'll show how I got Terraform and Serverless to work together to deploy an application, using both frameworks' strengths to complement each other.

**From here on, I'll refer to the Serverless Framework as SF, to avoid confusing it with the general term serverless.**

Terraform and Serverless sitting on a tree

First some principles:

- Use SF for Lambda & API Gateway

- Use Terraform for everything else.

- Use a tfvars file for Terraform variables

- Use JSON for the tfvars file

- Terraform deploys first followed by SF

- Terraform will not depend on any output from SF

- SF may depend on output from Terraform

- Use SSM Parameter Store to capture Terraform outputs

- Import inputs into Serverless from SSM Parameter Store

- Use workspaces in Terraform to manage different environments.

- Use stages in Serverless to manage different environments. stage.name == workspace.name
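To make the ordering concrete, here is a minimal Python sketch of a wrapper that follows the principles above: Terraform first, SF second, with the same name used for both the workspace and the stage. It is not the script used in this post. The function names and the `<stage>.tfvars.json` file naming are assumptions for illustration, and it assumes the workspace already exists and that terraform and serverless are on your PATH.

```python
import subprocess
from typing import List


def deploy_commands(stage: str) -> List[List[str]]:
    """Return the CLI calls for one environment, in the order they should run."""
    # Hypothetical naming convention: one JSON tfvars file per environment.
    var_file = f"{stage}.tfvars.json"
    return [
        # Terraform goes first; the workspace name doubles as the SF stage name.
        ["terraform", "workspace", "select", stage],
        ["terraform", "apply", f"-var-file={var_file}"],
        # SF goes last, so it can read what Terraform wrote to SSM Parameter Store.
        ["serverless", "deploy", "--stage", stage],
    ]


def deploy(stage: str) -> None:
    """Run each step in order, stopping at the first one that fails."""
    for command in deploy_commands(stage):
        # check=True raises CalledProcessError on a non-zero exit code,
        # so SF never deploys against half-applied infrastructure.
        subprocess.run(command, check=True)
```

Calling deploy("dev") would select the dev workspace, apply Terraform with dev.tfvars.json (Terraform still asks for confirmation interactively), and then deploy the dev stage with SF.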

In the end the deployment will look like this: