Recently I found myself working with an S3 bucket of 13,000 CSV files that I needed to query. Initially I was excited, because I now had an excuse to play with AWS Athena or S3 Select -- two serverless tools I'd been meaning to dive into.

But that excitement was short-lived!

For some (as yet unexplained) reason, AWS Athena is not available in us-west-1, which is seemingly the only US region where Athena is not available!

And... guess where my bucket was? That's right, the one region without AWS Athena.

Now, I thought there'd be a simple way to copy objects from one bucket to another -- after all, copy-and-paste is basic computer functionality, and we have keyboard shortcuts for this exact thing. But as it turns out, once you have thousands of objects in a bucket, it becomes a slow, painful and downright impossible task to get done sanely.

For one, S3 objects aren't indexed -- AWS doesn't keep a directory of all the objects in your bucket that you can simply query. You can request an inventory from the console, but that's a snapshot of your bucket's contents rather than a real-time index, and it's very slow -- measured-in-days slow! An alternative is to use the list_bucket method.

But there's a problem with list_bucket as well: it's sequential (one request at a time) and limited to 'just' 1,000 items per request. A full listing of a million objects would require 1,000 sequential API calls just to list the keys in your bucket. Fortunately, I had just 13,000 CSV files, so this part was fast, but listing isn't the biggest problem!
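To make the "sequential" part concrete, here's a minimal sketch of a paginated listing. It assumes boto3's `list_objects_v2` call (my choice for illustration, not necessarily what the post's list_bucket refers to), and `list_keys` is a hypothetical helper name. The client is passed in, so any object with a compatible `list_objects_v2` method will do.

```python
from typing import Any, Iterator


def list_keys(s3_client: Any, bucket: str, prefix: str = "") -> Iterator[str]:
    """Yield every key in the bucket, one page (up to 1,000 keys) at a time."""
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        # "Contents" is absent when a page has no objects.
        for obj in page.get("Contents", []):
            yield obj["Key"]
        if not page.get("IsTruncated"):
            break
        # The next request needs the token from this response,
        # which is why the pages can't be fetched in parallel.
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
```

With boto3 installed, something like `list(list_keys(boto3.client("s3"), "my-bucket"))` would collect the keys, where `my-bucket` is a placeholder name.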

Once you've listed out your bucket, you're then faced with the monumentally slow task of actually copying the files. The S3 API has no bulk-copy method, and while you can use copy_object for a file of almost any size (up to 5 GB in a single call), it only works on one file at a time.

Hence copying 1 million files would require 1 million API calls -- these can run in parallel, but it would have been nicer to batch them up, the way the delete_keys method does for deletes.
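As a rough illustration of the "one call per file, but in parallel" idea, here's a sketch that fans `copy_object` calls out over a thread pool. It assumes a boto3-style client passed in by the caller; `copy_keys` and the `max_workers` default are hypothetical choices of mine, not the tooling this post goes on to build.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Iterable, List


def copy_keys(s3_client: Any, src_bucket: str, dst_bucket: str,
              keys: Iterable[str], max_workers: int = 32) -> List[str]:
    """Copy each key from src_bucket to dst_bucket, one copy_object call per key."""

    def copy_one(key: str) -> str:
        # Server-side copy: the object's bytes never pass through this machine.
        s3_client.copy_object(
            Bucket=dst_bucket,
            Key=key,
            CopySource={"Bucket": src_bucket, "Key": key},
        )
        return key

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() keeps the input order and re-raises the first failure it hits.
        return list(pool.map(copy_one, keys))
```

Threads suit this because each call spends nearly all its time waiting on the network. It's still one request per object, though, so the total number of calls doesn't shrink.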

So to recap, copying 1 million objects requires 1,001,000 API requests, which can be painfully slow unless you've got some proper tooling.
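For anyone who wants to check the arithmetic, here's a tiny sketch of that request count: one list call per page of up to 1,000 keys, plus one copy call per object. `requests_needed` is just an illustrative helper name.

```python
def requests_needed(num_objects: int, page_size: int = 1000) -> int:
    """Total API requests to list and then copy num_objects objects."""
    # One list request per page of up to page_size keys (rounded up).
    list_calls = (num_objects + page_size - 1) // page_size
    # One copy request per object, since there is no bulk copy.
    copy_calls = num_objects
    return list_calls + copy_calls


print(requests_needed(1_000_000))  # 1001000
print(requests_needed(13_000))     # 13013
```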

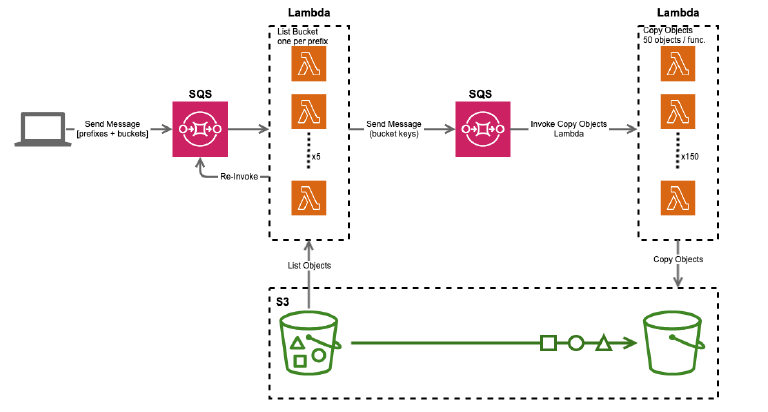

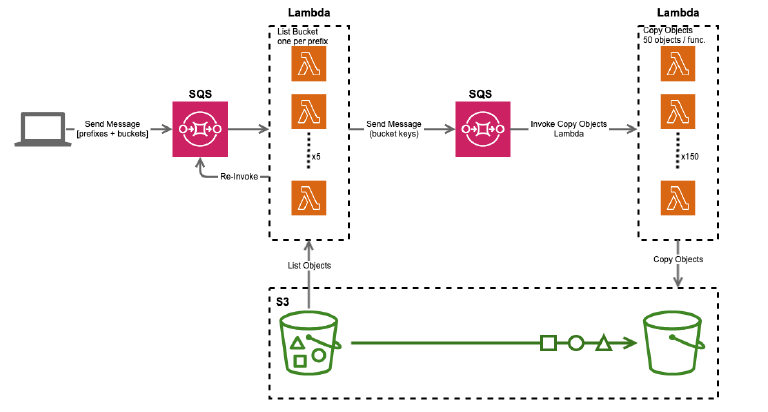

AWS recommends using S3DistCp, but I didn't want to spin up an EMR cluster 'just' to handle this relatively simple cut-n-paste problem. Instead I did the terribly impractical thing and built a serverless solution to copy files from one bucket to another, which looks something like this: