Lambda functions are awesome, but they only provide a single dimension to allocate resources - memorySize. The simplicity is refreshing, as lambda functions are complex enough -- but AWS really shouldn't have called it memorySize if it controls CPU as well.

Then again this is the company that gave us Systems Manager Session Manager, so the naming could have been worse (much worse!).

Anyway... I digress.

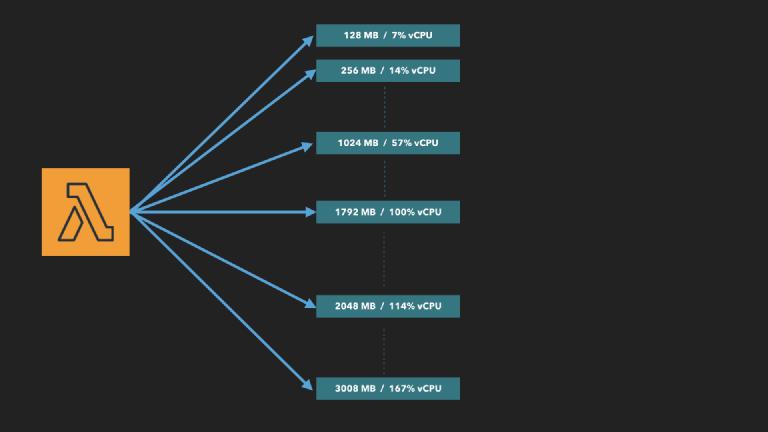

The memorySize of your lambda function allocates both memory and CPU in proportion, i.e. twice as much memory gives you twice as much CPU.

The smallest lambda starts at a minimum of 128MB of memory, which you can increase in steps of 64MB, all the way to 3008MB (just shy of 3GB).
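To make those limits concrete, here is a minimal sketch that enumerates and checks memorySize values using only the numbers quoted above. The constant and function names are made up for illustration; they are not part of any AWS SDK.

```python
# Limits as quoted in this post (assumed here, not read from AWS).
MIN_MB = 128
MAX_MB = 3008
STEP_MB = 64


def valid_memory_sizes():
    """Every memorySize from 128MB to 3008MB in 64MB steps."""
    return list(range(MIN_MB, MAX_MB + 1, STEP_MB))


def is_valid_memory_size(mb):
    """True if mb is inside the range and sits on a 64MB step."""
    return MIN_MB <= mb <= MAX_MB and (mb - MIN_MB) % STEP_MB == 0


if __name__ == "__main__":
    sizes = valid_memory_sizes()
    print(len(sizes), "sizes, from", sizes[0], "to", sizes[-1])
```

Note that 1792MB lands exactly on one of these steps, which matters in a moment.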

So far, nothing special.

But at 1792MB, something wonderful happens -- you get one full vCPU. This is Gospel truth in lambda-land, because the AWS documentation says so. In short, a 1792MB lambda function gets 1 vCPU, and a 128MB lambda function gets ~7% of that (since 128MB is roughly 7% of 1792MB).

Doing the maths, we realize that at 3008MB, our lambda function is allocated 167% of a vCPU.
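The arithmetic is just a ratio against the 1792MB mark. A quick sketch, assuming the allocation really is linear in memorySize (the function and constant names are hypothetical):

```python
# Memory size at which a function gets one full vCPU, per the post.
FULL_VCPU_MB = 1792


def vcpu_share(memory_mb):
    """vCPU share as a fraction (1.0 == one full vCPU), assuming linear scaling."""
    return memory_mb / FULL_VCPU_MB


if __name__ == "__main__":
    for mb in (128, 1792, 3008):
        print(f"{mb}MB -> {vcpu_share(mb):.1%} of a vCPU")
```

Strictly speaking, 3008 / 1792 comes out a shade under 168%; the 167% figure is that number with the decimals dropped.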

But what does that 167% vCPU mean?!

I can rationalize anything up to 100%. After all, getting 50% vCPU simply means you get the CPU for 50% of the time. Past 100%, though, things get a bit wonky.

So what does having 120% vCPU mean -- do you get 1 full core plus 20% of another? Or do you get 60% of two cores?
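To pin down the two readings, here is a small sketch that splits a vCPU share across cores both ways. Both readings are guesses at this point, and the function name and the two-core default are assumptions for illustration only.

```python
def split_interpretations(share, cores=2):
    """Per-core allocations under the two readings of a >100% vCPU share."""
    # Reading 1: fill one core completely, then spill the rest onto the next.
    fill_first = []
    remaining = share
    for _ in range(cores):
        used = min(1.0, remaining)
        fill_first.append(used)
        remaining -= used

    # Reading 2: spread the share evenly across all cores.
    spread_evenly = [share / cores] * cores
    return fill_first, spread_evenly


if __name__ == "__main__":
    fill_first, spread_evenly = split_interpretations(1.2)
    print("full core + remainder:", fill_first)
    print("evenly spread:        ", spread_evenly)
```

The total is the same either way. What differs is how much of a core any single thread could ever get: all of it under the first reading, only 60% under the second.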