While doing programming challenges in Advent of Code, I came across an interesting behavior of LLMs in coding assistants and decided to write about it to organize my thoughts.

First some background.

Advent of Code is a series of daily coding challenges released during the season of Advent (the period just before Christmas). Each challenge has two parts, and you must solve Part 1 before Part 2 is revealed. Part 2 is harder than Part 1 and usually requires a rewrite to solve: sometimes quite an extensive one, other times just a small incremental step.

If you haven’t done these challenges before, I encourage you to try. None of them are easy (at least for me), but all of them are solvable with enough elbow grease and time.

That said, the challenges are still contrived. Firstly, the questions are much better written than what you’d see in a Jira ticket or requirements document: they include a detailed description of what must be done, plus sample inputs and outputs you can test against. Secondly, the challenges extend beyond what most coders do on a daily basis. One challenge required writing a small program to ‘defrag’ a disk, another required building a tiny assembler that ran its own program, and multiple questions involved navigating a 2D maze with obstacles along the way. All fun things you will probably never do as a programmer in the real world.

I took on the challenges both to improve my coding skills and to learn how I could use coding assistants in these close-to-real-world scenarios. The hope was that I would gain some insight into using these tools more effectively, should I ever need to do something more than solve contrived programming challenges before Christmas.

OK. Background complete.

Let’s move on to the challenge that changed the way I look at LLMs forever.

There are 25 challenges in total, each consisting of 2 parts. Here is a summarized version of the first part of Challenge number 13:

- A resort has an arcade with claw machines.

- Each machine has two buttons, A and B.

- Button A costs 3 tokens, Button B costs 1 token.

- Each button moves the claw a specific amount along the X and Y axes.

- To win a prize, the claw must be positioned exactly above the prize on both axes.

- Each button can be pressed a maximum of 100 times.

- Find the minimum number of tokens required to win the prize on each machine

An example scenario would be the following:

Button A: X+94, Y+34

Button B: X+22, Y+67

Prize: X=8400, Y=5400

Pause for a moment and try to construct your strategy for solving this puzzle. Keep in mind that the solution will need to handle quite a few of these machines.

Done? Ok, let’s proceed.

This question is especially beautiful because it contains “hints” that are actually nefarious red herrings. But I don’t want to spoil the fun, so let’s go ahead and solve it.

Because each button can be pressed at most 100 times, there are only about 10,000 possible combinations per machine. This might sound like a lot, but my 6-year-old MacBook handled it easily, so we can construct a simple brute-force solution.

We loop over every number of button A presses from 0 to 100 and, for each of those, every number of button B presses from 0 to 100, keeping any combination that gets the claw to the prize. Next we calculate the number of tokens each of those combinations costs, and print the smallest as our final answer.
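A minimal sketch of that brute force in Python, assuming each machine's numbers have already been parsed into integers. The function and parameter names are illustrative, not the author's original code:

```python
def min_tokens_brute_force(ax, ay, bx, by, px, py, max_presses=100):
    """Try every (A, B) press count up to max_presses and return the cheapest
    combination that lands exactly on the prize, or None if none does."""
    best = None
    for a_pushes in range(max_presses + 1):        # 0..100 presses of A
        for b_pushes in range(max_presses + 1):    # 0..100 presses of B
            x = a_pushes * ax + b_pushes * bx
            y = a_pushes * ay + b_pushes * by
            if x == px and y == py:
                tokens = 3 * a_pushes + b_pushes   # A costs 3 tokens, B costs 1
                if best is None or tokens < best:
                    best = tokens
    return best


# Example machine from the summary above: A is X+94, Y+34; B is X+22, Y+67
print(min_tokens_brute_force(94, 34, 22, 67, 8400, 5400))  # 280 (80 A presses, 40 B presses)
```

The two nested `range(101)` loops are exactly what stops scaling in Part 2.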

Easy.

But now let’s move on to Part 2, which, as always, has a twist that makes it more difficult:

- You realize there is a unit conversion error in the position of the prizes

- They’re off by a factor of more than 10 million

- Now instead of just 100 presses for each button, each prize will require more than 100 billion presses

Nested for loops aren’t going to cut it here. Well, they could, but you’d be looping through 10 sextillion (!!) iterations for each machine. I’m not a very good programmer, but even I know you should never consider nested loops for numbers that end in ‘illion’.

Take a pause again, and think about how you might solve part 2.

The solution is simple once you realize this is a linear algebra problem, and quite a simple one at that.

We can see this in the check at the heart of our initial solution. There are two equations in the variables a_pushes and b_pushes; for the example machine they are 94 × a_pushes + 22 × b_pushes = 8400 (X axis) and 34 × a_pushes + 67 × b_pushes = 5400 (Y axis). Since we have two variables and two linear equations, the system is solvable. Personally I like the matrix approach, and Python conveniently has the numpy package, which solves this problem in one line.
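Here is a sketch of the matrix approach, assuming numpy is installed. The one line doing the work is `np.linalg.solve`. The function name and the rounding-plus-exact-check step are illustrative additions: the solve happens in floating point, so the answer is rounded to whole presses and then verified with plain integers before it is trusted.

```python
import numpy as np


def tokens_numpy(ax, ay, bx, by, px, py):
    """Solve the 2x2 system with numpy, then confirm the answer is a whole,
    non-negative number of presses. Returns None if there is no valid answer."""
    # Each column is one button's (X, Y) movement: M @ [a, b] = [px, py]
    m = np.array([[ax, bx], [ay, by]], dtype=float)
    prize = np.array([px, py], dtype=float)
    a_float, b_float = np.linalg.solve(m, prize)  # raises LinAlgError if M is singular
    # Round to the nearest integer, then re-check exactly with Python ints,
    # because the floating-point solution is only approximate
    a_pushes, b_pushes = int(np.rint(a_float)), int(np.rint(b_float))
    if a_pushes < 0 or b_pushes < 0:
        return None
    if a_pushes * ax + b_pushes * bx != px or a_pushes * ay + b_pushes * by != py:
        return None
    return 3 * a_pushes + b_pushes


print(tokens_numpy(94, 34, 22, 67, 8400, 5400))  # 280
```

The exact integer check catches a bad rounding but cannot repair one. For prize coordinates many orders of magnitude larger, an exact integer method is a safer choice.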

Much more performant.

Much more elegant.

Certainly better than the solution we wrote for Part 1.

But … why did we come up with such a slow solution for Part 1 in the first place? I think it was because of the last two lines of the instructions:

- Each button can be pressed a maximum of 100 times.

- Find the minimum number of tokens required to win the prize on each machine

The first is what I call a pink herring: it helps you in Part 1, but sends you wildly off course in Part 2. Yes, in Part 1 it helped us by guiding us towards nested for loops, but that approach was completely untenable for Part 2. Had the question not included a maximum number of presses, we might have gone straight to linear algebra and needed no rewrite for Part 2.

The second is a particularly nasty red herring. This question only has 1 (and only 1!!) solution. There is no concept of minimum or maximum, because there is only ONE solution. Any code you wrote to choose a minimum from a list of possible solutions was completely and utterly unnecessary.
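A worked version of that claim follows. The symbols are introduced here for illustration: $a$ and $b$ are the press counts, $(A_x, A_y)$ and $(B_x, B_y)$ are the button movements, and $(P_x, P_y)$ is the prize. By Cramer's rule, the system has exactly one solution whenever the determinant $A_x B_y - A_y B_x$ is non-zero, which means the two buttons don't move the claw in parallel directions:

$$
\begin{aligned}
a\,A_x + b\,B_x &= P_x \\
a\,A_y + b\,B_y &= P_y
\end{aligned}
\quad\Longrightarrow\quad
a = \frac{P_x B_y - P_y B_x}{A_x B_y - A_y B_x}, \qquad
b = \frac{A_x P_y - A_y P_x}{A_x B_y - A_y B_x}
$$

For the example machine:

$$
a = \frac{8400 \cdot 67 - 5400 \cdot 22}{94 \cdot 67 - 34 \cdot 22} = \frac{444000}{5550} = 80, \qquad
b = \frac{94 \cdot 5400 - 34 \cdot 8400}{5550} = \frac{222000}{5550} = 40
$$

That gives $3 \cdot 80 + 40 = 280$ tokens. If the single solution is not a pair of whole, non-negative numbers, the prize simply can't be won, and there is still nothing to minimize. This argument assumes a non-zero determinant. With parallel buttons there could be no solution or infinitely many.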

And here is where we talk about the LLMs in coding assistants.

First off, none of the LLMs in any of the assistants could solve this puzzle in one go (even just Part 1). Some came close, requiring only minimal tweaking to get Part 1 working.

Second, ALL the LLMs used the nested for loop solution in their first iteration, just like I did.

ALL!

Without exception!

Sonnet, GPT-4o and Gemini Flash.

It’s interesting that the LLMs fall for the same tricks that humans do. But then again, the red herrings ‘prompt’ the LLMs down a certain path, so we shouldn’t be all that surprised.

If you grew up watching Star Trek like me, you know that Data, the android on the starship Enterprise, is rational, emotionless, and super intelligent. Data wouldn’t be tricked by this. These red herrings are more likely to trick a robot like C-3PO, a bewildered buffoon whose code you certainly wouldn’t trust.

I’m not saying the LLMs are like C-3PO, but they’re certainly not like Data.

I prompted them again to make their code faster:

> the solution is too slow, it times out because the real values, the number of presses can be in the millions. Is there a faster way to solve this?

And voilà, all of them managed to identify this as a linear algebra problem, and, surprisingly, all three took different approaches to solving it. Each solution worked in the end (after some tweaking), and the problem was solved.

But … the LLMs still held on to the concept of minimum tokens, either through the naming of the function or method or, in at least one case, by still checking for a minimum value. To me, this just means the LLMs never really ‘understand’ anything, even though they give off the impression of deep understanding.

Any human who understood linear algebra would remove all mentions of ‘minimum’ from their code once they understood the problem. There is no minimum here, so removing any mention of one improves our code.
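Here is a sketch of what that cleanup could look like. The function name is hypothetical. It also swaps numpy's floating-point solve for Cramer's rule in exact integer arithmetic, which avoids rounding concerns with Part 2's very large numbers. The word "minimum" appears nowhere in the logic:

```python
def tokens_to_win(ax, ay, bx, by, px, py):
    """Return the token cost of the single (A, B) press combination that reaches
    the prize, or None if no whole, non-negative combination exists."""
    det = ax * by - ay * bx
    if det == 0:
        # Parallel buttons: zero or infinitely many solutions; not handled in this sketch
        raise ValueError("buttons move the claw in parallel directions")
    # Cramer's rule numerators for the A and B press counts
    a_num = px * by - py * bx
    b_num = ax * py - ay * px
    # divmod keeps everything in exact integer arithmetic
    a_pushes, a_rem = divmod(a_num, det)
    b_pushes, b_rem = divmod(b_num, det)
    if a_rem or b_rem or a_pushes < 0 or b_pushes < 0:
        return None  # the prize can't be reached with whole, non-negative presses
    return 3 * a_pushes + b_pushes


print(tokens_to_win(94, 34, 22, 67, 8400, 5400))  # 280
```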

The coding assistants really did help me solve these questions faster, but I think we need to be cautious about what help we accept. If you don’t understand linear algebra, having an LLM write code that uses it means you would be running code that neither you nor the LLM understands well. That’s a recipe for disaster.

LLMs are susceptible to trickery just like humans — so how can we use them more effectively?

System 1 and System 2

A framework I found useful comes from psychologist Daniel Kahneman. If psychology were football, Kahneman would stand alongside Pelé, Maradona, Messi and Ronaldo.

In his book Thinking, Fast and Slow, he describes two systems in our brain that operate largely independently of each other, to which he gave the unfortunate names System 1 and System 2 (reading too much Cat in the Hat?).

System 1 is responsible for quick judgments and decisions based on patterns and experience. It handles automatic activities like detecting hostility in a voice, reading words on billboards, and driving a car on an empty road.

System 2 is slower, more deliberate, and more logical. It handles complex problem-solving and analytical tasks: seeking new or missing information, making decisions, and thinking logically and skeptically.

The best way to illustrate this is the bat-and-ball question. I’m sure you’ve seen it before:

A baseball bat and a ball together cost $1.10.

The bat costs $1 more than the ball.

How much does the ball cost?

The immediate answer that jumps into your brain is 10 cents ($0.10). But that is the wrong answer. Upon learning this is the wrong answer, most folks can slowly figure out that the correct answer is indeed 5 cents ($0.05).
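The algebra behind the correct answer is short. Let b be the ball's price in dollars, so the bat costs b + 1:

$$
\begin{aligned}
b + (b + 1) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
$$

The intuitive answer fails this check. A 10-cent ball would mean a bat at 110 cents and a total of 120 cents, not 110.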

The first answer of 10 cents comes from System 1. It’s always on, ready to go, and barrels in with an answer instantly. Once you’re told this is wrong, your mind kickstarts System 2, which may do a little algebra or a quick calculation or two, and eventually ends up with the correct answer of 5 cents. System 2 is not always on: it’s a finite resource with expensive computational requirements. It does some sanity checks on System 1, but only ever runs at full power when required.

System 1 is easily fooled by red herrings, like “find the minimum number of tokens” in a problem with only one solution. System 2 is more deliberate and works from first principles: it’s the system you start up whenever you tell yourself “let’s take a step back”.

Are LLMs purely System 1 automatons?

Well, the question has been posed before. Initially (in the GPT-3 days) LLMs would fall for these tricks, but they have since improved. But have they improved to the point that they are actually reasoning at a System 2 level?

Or is it still just System 1 with guardrails that prevent silly mistakes on very popular psychology questions? Are LLMs in coding assistants purely System 1 automatons with hard-coded checks to make them look like System 2?

All the models were able to correctly solve the linear algebra problem once told that their solution was too slow. They correctly identified the problem, but only after being told, in effect, to ignore the ‘100 presses’ condition. They still thought there would be a minimum token count (the other red herring, which we never prompted them to ignore). So red herrings work until you explicitly tell the model to avoid them.

In other words, the model has no way of working out that something is a red herring unless the user explicitly tells it. It doesn’t have a deep understanding of linear algebra, or even a high-school-level one. It’s brain-dumping.

There’s a brilliant talk by Terence Tao in which he says something about LLMs, and how they help humans solve complex math problems, that really resonated with me:

> …they’re not solving the problem from first principles, they’re just guessing at each step of the output what is the most natural thing to say next. The amazing thing is sometimes that works, but often it doesn’t

Of course, this talk was from the GPT-4 days, and perhaps things have changed, but I have yet to see something that actually solves problems from first principles rather than predicting the next token. Just saying “explain your steps” doesn’t fundamentally change the way the model approaches the problem. The underlying model still passes input through a large, complex network of vector calculations to produce an output, and the presence of ‘reasoning’ doesn’t necessarily mean there is understanding, or building from first principles.

After all, System 1 works in much the same way as an LLM. You don’t start every conversation with a plan for where it will conclude; you just blurt out the words, and by the time you’ve said something, the next thing pops into your mind and you say that … ad infinitum. That’s System 1.

System 2 is like deliberate writing: you start with something, refine it over several iterations, make sure the message is clear, and only then publish it. So how can we use an LLM, knowing that it’s a sort of System 1 assistant?

Challenge 23

Challenge 23 helped me further clarify my thoughts. Part 1 of that challenge can be summarized as below:

- You’re given a network map of computer connections

- Each connection is represented as two computer names joined by a hyphen (e.g., kh-tc)

- Connections are undirected (order doesn’t matter)

- Task:

  - Find all sets of 3 computers that are fully connected to each other

  - Count how many of these sets contain a computer with a name starting with ‘t’

I first attempted to solve this on my own, but with a twist. Recognizing that this was a graph problem, I looked for popular graph tools for Python (my programming language of choice). Initially I looked at CogDB, since it was the first search result that made sense. But I soon gave up: while it had promise, the project seemed somewhat abandoned.

After searching a bit more, I stumbled across a Reddit post that suggested networkx. I did a little more digging and found rich documentation and a community around the package, so I used it.

After finding networkx, I used the coding assistants to help me write code for it, and in barely 20 minutes I had solved the challenge. The main logic took under 3 lines of code. Most of my time was spent researching the problem and possible solutions; very little was spent actually coding.
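Here is a sketch of what those few lines might look like with networkx. It assumes one `name-name` connection per line, and the sample below is invented for illustration rather than taken from the puzzle. `nx.enumerate_all_cliques` yields cliques in order of increasing size, so the loop can stop once cliques grow past three computers:

```python
from itertools import takewhile

import networkx as nx

# Hypothetical sample input: one undirected connection per line
SAMPLE = """\
kh-tc
tc-qp
qp-kh
de-cg
cg-ka
ka-de
ta-de
ta-ka
ta-cg"""


def build_graph(text):
    """Build an undirected graph with one edge per 'a-b' line."""
    graph = nx.Graph()
    for line in text.splitlines():
        a, b = line.strip().split("-")
        graph.add_edge(a, b)
    return graph


def count_t_triangles(graph):
    """Count fully connected sets of 3 computers with at least one name starting with 't'."""
    # enumerate_all_cliques yields cliques smallest first, so stop after size 3
    small_cliques = takewhile(lambda c: len(c) <= 3, nx.enumerate_all_cliques(graph))
    triangles = [c for c in small_cliques if len(c) == 3]
    return sum(any(name.startswith("t") for name in tri) for tri in triangles)


print(count_t_triangles(build_graph(SAMPLE)))  # 4
```

In this made-up sample, `kh`, `tc` and `qp` form one triangle, and `cg`, `de`, `ka` and `ta` are all connected to each other, which yields four more. Four of the five triangles contain a name starting with `t`.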

What happened next was even more impressive. When Part 2 rolled out, the question asked for the largest clique in the network (a set of computers that are all connected to each other). This took one extra line of code, because I had used an external package purpose-built over many years to solve graph problems. What would sometimes take a massive rewrite or performance work was solved here in just one extra line.
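For Part 2, here is a sketch of that extra line, run on invented sample connections rather than the real puzzle input. `nx.find_cliques` yields every maximal clique, and taking the longest one gives a largest clique (ties are broken arbitrarily):

```python
import networkx as nx

# Hypothetical sample connections (not the real puzzle input)
EDGES = [
    ("kh", "tc"), ("tc", "qp"), ("qp", "kh"),
    ("de", "cg"), ("cg", "ka"), ("ka", "de"),
    ("ta", "de"), ("ta", "ka"), ("ta", "cg"),
]

graph = nx.Graph()
graph.add_edges_from(EDGES)

# The "one extra line": keep the biggest of the maximal cliques
largest = max(nx.find_cliques(graph), key=len)

print(sorted(largest))  # ['cg', 'de', 'ka', 'ta']
```

Finding a maximum clique is NP-hard in general. This approach relies on the graph being small or sparse enough, as the author's puzzle input evidently was.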

More importantly, I didn’t need the LLMs to generate large blocks of code for me either; I was using code from a high-quality package. This approach generated little technical debt.

If you asked the LLMs to solve the problem, they would all barrel down the System 1 path and write nested for loops and massive if-else statements. Developers running on System 1 would do exactly the same thing: start writing code for a problem the instant they see it. The trouble is that even if it solves the problem, you now have hundreds of lines of code to maintain, test, and validate, when a battle-hardened external open-source solution would have been far better.

Great developers read a problem, understand the requirements, and research possible solutions before they even write that first line of code (unless it’s a space they’re extremely familiar with). Chances are you’re not one of them, and just dumping the problem onto an LLM is definitely not the way to go.

Engage your System 2: pose the problem, suggest possible solutions (such as an external package you’re confident will cover your use case), and you’ll get a much better solution, one that is not only more elegant but also more maintainable.

The human (at least for now) has to initiate the System 2 behavior. Think about how to solve the problem, perhaps even taking your time, knowing that the actual coding (where System 1 takes over) can be substantially automated and accelerated.

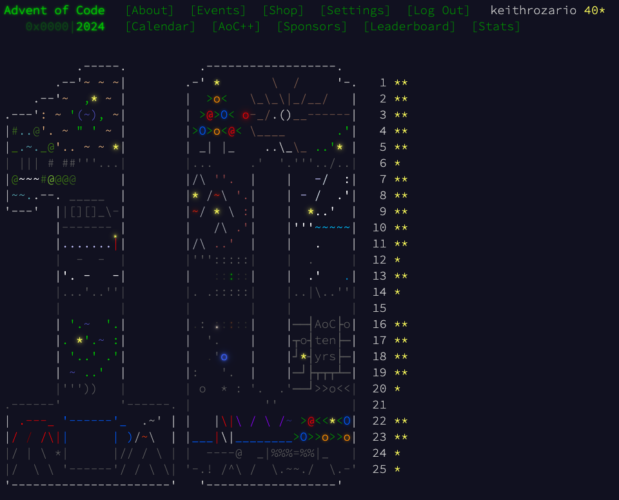

This is all new to me, but I’m now getting the hang of LLMs. I’m not a professional coder, but I managed to solve most of the Advent of Code challenges with help from the coding assistants. Without them I would not have been able to do it, at least not in the same amount of time.

However, I think the best coders will always be the ones who work from first principles: the ones who can decline what the LLM offers and guide it down the ‘right’ path. Everybody else is going to ‘produce’ hundreds or even thousands of lines of code from an LLM, most of which won’t make sense and, even where it works, will be unreadable.

I shudder at a world where code is rewritten over and over again, and maintained in a million different places.

Conclusion

Coding assistants are here to stay. At $20 to $30 a month, it’s hard to find fault with them. Developers can cost anywhere from $300 to $3,000 a day, so an assistant only needs to save something on the order of an hour a month to pay for itself, or, if you’re really expensive, perhaps even just 15 minutes a month. It will almost certainly pay for itself, and more, immediately.
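Here is a rough break-even check. It assumes an 8-hour working day and uses the top subscription price of 30 dollars a month:

$$
\frac{\$300}{8\ \text{h}} = \$37.50/\text{h}, \qquad \frac{\$30}{\$37.50/\text{h}} = 0.8\ \text{h} \approx 48\ \text{min}
$$

$$
\frac{\$3000}{8\ \text{h}} = \$375/\text{h}, \qquad \frac{\$30}{\$375/\text{h}} = 0.08\ \text{h} \approx 5\ \text{min}
$$

Under those assumptions, saving about an hour a month covers the cheapest developer, and 15 minutes comfortably covers the most expensive one.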

However … this isn’t a case of freeing up System 1 so developers can focus on System 2, because that’s not what will happen. There’s a reason System 2 isn’t always engaged: it’s expensive cognition, a finite resource with a maximum number of hours of use per day. Just because you have time for System 2 doesn’t mean it can be engaged. But if developers know that the final, code-writing part of the solution can be accelerated, they can spend more time in the design phase of a problem, confident they’ll hit deadlines with an LLM by their side.

So….

Be careful how you use them. Blindly accepting what an LLM outputs is a recipe for disaster, and so is dumping a question on it and hoping for a good solution. The solution has to be coaxed out of the LLM, with you, the human, as the System 2 thinker.

Sure, someone posted on LinkedIn about how the latest LLM managed to compile a binary that accomplished everything they wanted in a single shot, but there are millions of people using these assistants. One of them is certain to get that one-in-a-million response; do not expect it to be the norm. People only post exceptional things, after all.

Using LLMs effectively and consistently requires some fine-tuning of the user: understanding the limitations of these tools, and how to avoid the pitfalls that come with them.

Addendum

I found this beautiful comment on Y Combinator’s Hacker News that I absolutely had to include here as well:

> There’s this concept in aviation of “ahead of or behind the plane”. When you’re ahead of the plane, you understand completely what it’s doing and why, and you’re literally thinking in front of it, like “in 30 minutes we have to switch to this channel, confirm new heading with ATC” and so forth. When you’re behind the plane, it has done something unexpected and you are literally thinking behind it, like “why did it make that noise back there, and what does that mean for us?”
>
> I think about coding assistants like this as well. When I’m “ahead of the code,” I know what I intend to write, why I’m writing it that way, etc. I have an intimate knowledge of both the problem space and the solution space I’m working in. But when I use a coding assistant, I feel like I’m “behind the code” – the same feeling I get when I’m reviewing a PR. I may understand the problem space pretty well, but I have to basically pick up the pieces of the solution presented to me, turn them over a bunch, try to identify why the solution is shaped this way, if it actually solves the problem, if it has any issues large or small, etc.
>
> It’s an entirely different way of thinking, and one where I’m a lot less confident of the actual output. It’s definitely less engaging, and so I feel like I’m way less “in tune” with the solution, and so less certain that the problem is solved, completely, and without issues. And because it’s less engaging, it takes more effort to work like this, and I get tired quicker, and get tempted to just give up and accept the suggestions without proper review.
>
> I feel like these tools were built without any sort of analysis if they _were_ actually an improvement on the software development process as a whole. It was just assumed they must be, since they seemed to make the coding part much quicker.

I guess the best way to be ‘ahead of the code’ is to be the active human in the loop: instructing the LLM while maintaining full control of what’s happening. Asking the assistant to provide you with hundreds of lines of code without knowing what choices or decisions were made will always put you behind the code.